The I.Q. of A.I.

How UConn researchers are teaching robots to think like humans.

By Colin Poitras ’85 (CLAS)

Illustrations by Lucy Engelman

There’s a great scene in the movie “Iron Man” where Robert Downey Jr.’s character Tony Stark (aka Iron Man) is crawling across his lab, desperately trying to reach the small arc reactor he needs to keep his heart beating and stay alive.

Weakened by a run-in with arch villain Obadiah Stane, Stark can’t reach the gizmo where it sits on a tabletop. Defeated, he rolls onto his back, exhausted and pondering his inevitable doom.

But the very moment that we think our intrepid hero’s a goner, a metallic hand appears at Stark’s shoulder, holding the lifesaving device. “Good boy,” Stark says weakly as he takes the device from his robot assistant, Dum-E.

And just like that, our hero is saved.

From the dutiful shuffling of C-3PO to the terrorizing menace of The Terminator, Hollywood has made millions tantalizing audiences with far-out robot technology. Scenes like the one in “Iron Man” make for good entertainment, but they also are based, to some degree, in reality.

Dum-E’s interaction with Stark is called collaborative robotics, where robots with advanced artificial intelligence, or A.I., not only work alongside us humans but also are able to anticipate our actions and even grasp what we need.

Collaborative robotics represents the frontier of robotics and A.I. research today. And it’s happening at UConn.

“Unless mankind redesigns itself by changing our DNA through altering our genetic makeup, computer-generated robots will take over our world.” — Stephen Hawking

Three thousand miles away from the klieg lights of Hollywood, Ashwin Dani, director of UConn’s Robotics and Controls Lab, or RCL, stands in the stark fluorescent light of his Storrs office staring at a whiteboard covered in hastily scrawled diagrams and mathematical equations.

Here, in the seemingly unintelligible mishmash of numbers and figures, are the underlying mathematical processes that are the lifeblood of collaborative robotics.

If robots are going to interact safely and appropriately with humans in homes and factories across the country, they need to learn how to adapt to the constantly changing world around them, says Dani, a member of UConn’s electrical and computer engineering faculty.

“We’re trying to move toward human intelligence. We’re still far from where we want to be, but we’re definitely making robots smarter,” he explains.

All of the subconscious observations and moves we humans take for granted when we interact with others and travel through the world have to be taught to a robotic machine.

When you think about it, simply getting a robot to pick up a cup of water (without crushing it) and move it to another location (without spilling its contents or knocking things over) is an extraordinarily complex task. It requires visual acuity, a knowledge of physics, fine motor skills, and a basic understanding of what a cup looks like and how it is used.

“We’re teaching robots concepts about very specific situations,” says Harish Ravichandar, the senior Ph.D. student in Dani’s lab and a specialist in human-robot collaboration. “Say you’re teaching a robot to move a cup. Moving it once is easy. But what if the cup is shifted, say, 12 inches to the left? If you ask the robot to pick up the cup and the robot simply repeats its initial movement, the cup is no longer there.”

Repetitive programs that work so well for assembly-line robots are old school. If a robot running one of those programs is part of an assembly line and something changes, the line has to shut down and the robot has to be reprogrammed to account for the change, an inefficient process that costs manufacturers money. A collaborative robot, by contrast, has to be able to constantly process new information coming in through its sensors and quickly determine what it needs to do to safely and efficiently complete a task. Hence the thinking robot this team is trying to create.

While the internet is filled with mesmerizing videos of robots doing backflips, jumping over obstacles, and even making paper airplanes, the UConn team’s effort at controlling robots through advanced artificial intelligence is far less flashy but potentially far more important.

Every move the UConn team wants its test robot to make starts here, says Dani, with control theory, engineering, whiteboards, and math.

“We’re writing algorithms and applying different aspects of control theory to take robot intelligence to a higher level,” says Ravichandar. “Rather than programming the robot to make one single movement, we are teaching the robot that it has an objective — reaching for and grabbing the cup. If we succeed, the robot should be able to make whatever movements are necessary to complete that task no matter where the cup is. When it can do that, now the robot has learned the task of picking something up and moving it somewhere else. That’s a very big step.”
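The contrast Ravichandar describes can be sketched in a few lines of Python. This is an illustration only, not the lab's algorithm: it assumes a made-up two-dimensional workspace measured in inches, and the function names `replay_recorded_motion` and `reach_toward_goal` are hypothetical. The first function replays a stored path no matter where the cup is; the second uses a simple proportional rule that keeps stepping toward the cup's current position.

```python
import math


def distance(a, b):
    """Straight-line distance between two (x, y) points."""
    return math.hypot(a[0] - b[0], a[1] - b[1])


def replay_recorded_motion(recorded_path):
    """Old-school approach: replay a stored path and ignore where the cup is now."""
    return recorded_path[-1]


def reach_toward_goal(start, goal, gain=0.5, tolerance=0.01, max_steps=100):
    """Goal-directed approach: each step covers a fraction (gain) of the remaining gap."""
    x, y = start
    for _ in range(max_steps):
        dx, dy = goal[0] - x, goal[1] - y
        if math.hypot(dx, dy) < tolerance:
            break  # close enough to grasp
        x += gain * dx
        y += gain * dy
    return (x, y)


# Hypothetical numbers: the path was taught with the cup at (12, 10),
# then the cup was shifted 12 inches to the left.
recorded_path = [(0.0, 0.0), (6.0, 5.0), (12.0, 10.0)]
shifted_cup = (0.0, 10.0)

replay_end = replay_recorded_motion(recorded_path)
reach_end = reach_toward_goal((0.0, 0.0), shifted_cup)

print(distance(replay_end, shifted_cup))  # the replayed motion misses by 12 inches
print(distance(reach_end, shifted_cup) < 0.01)  # the goal-directed motion arrives
```

The replayed motion ends exactly where the cup used to be, while the goal-directed version reaches the cup wherever it is placed. A real robot would also have to sense the cup, avoid obstacles, and control its grip, none of which this sketch attempts.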

“I know that you and Frank were planning to disconnect me. And I’m afraid that’s something I cannot allow to happen.” — HAL in “2001: A Space Odyssey”

While most of us are familiar with the robots of science fiction, actual robots have existed for centuries. Leonardo da Vinci wowed friends at a Milan pageant in 1495 when he unveiled a robotic knight that could sit, stand, lift its visor, and move its arms. It was a marvel of advanced engineering, using an elaborate pulley and cable system and a controller in its chest to manipulate and power its movements.

But it wasn’t until Connecticut’s own Joseph Engelberger introduced the first industrial robotic arm, the 2,700-pound Unimate #001, in 1961 that robots became a staple in modern manufacturing.

Unimates were first called into service in the automobile industry, and today, automobile manufacturers like BMW continue to be progressive leaders using robots on the factory floor. At a BMW plant in Spartanburg, South Carolina, for example, collaborative robots help glue down insulation and water barriers on vehicle doors while their human counterparts hold the material in place.

The advent of high-end sensors, better microprocessors, and cheaper and easily programmable industrial robots is transforming industry today, with many mid-size and smaller companies considering automation and the use of collaborative robots.

Worldwide use of industrial robots is expected to increase from about 1.8 million units at the end of 2016 to 3 million units by 2020, according to the International Federation of Robotics. China, South Korea, and Japan use the most industrial robots, followed by the United States and Germany.

Anticipating further growth in industrial robotics, the Obama administration created the national Advanced Robotics Manufacturing Institute, bringing together the resources of private industry, academia, and government to spark innovations and new technologies in the fields of robotics and artificial intelligence. UConn’s Robotics and Controls Lab is a member of that initiative, along with the United Technologies Research Center, UTC Aerospace Systems, and ABB US Corporate Research in Connecticut.

Manufacturers see real value in integrating collaborative robots into their production lines. The biggest concern, clearly, is safety.

“The city’s central computer told you? R2D2, you know better than to trust a strange computer.” — C-3PO in “Star Wars”

There have been 39 incidents of robot-related injuries or deaths in the U.S. since 1984, according to the federal Occupational Safety and Health Administration. To be fair, none of those incidents involved collaborative robots and all of them were later attributed to human error or engineering issues.

The first human known to have been killed by a robot was Robert Williams in 1979. Williams died when he got tired of waiting for a part and climbed into a robot’s work zone in a storage area in a Ford Motor plant in Flat Rock, Michigan. He was struck on the head by the robot’s arm and died instantly. The most recent incident happened in January 2017, when an employee at a California plastics plant entered a robot’s workspace to tighten a loose hose and had his sternum fractured when the robot’s arm suddenly swung into action.

“When you have a human and a robot trying to do a joint task, the first thing you need to think about of course is safety,” says Dani. “In our lab, we use sensors that, along with our algorithms, not only allow the robot to figure out where the human is but also allow it to predict where the human might be a few seconds later.”
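One very simple way to picture that kind of prediction is to assume the person keeps moving at the same speed and in the same direction. The Python sketch below does only that. It is an assumption made for illustration, not a description of the lab's sensors or algorithms, and the function name `predict_position` and the numbers are hypothetical.

```python
def predict_position(previous, current, dt, horizon):
    """Extrapolate a future (x, y) position, assuming constant velocity.

    previous, current: two observed positions taken dt seconds apart.
    horizon: how many seconds ahead to predict.
    """
    vx = (current[0] - previous[0]) / dt
    vy = (current[1] - previous[1]) / dt
    return (current[0] + vx * horizon, current[1] + vy * horizon)


# Hypothetical readings: a hand moved 0.5 meters along x in half a second.
predicted = predict_position((0.0, 0.0), (0.5, 0.0), dt=0.5, horizon=2.0)
print(predicted)  # (2.5, 0.0)
```

People rarely move in straight lines at constant speed, so a practical system would need a richer model of human motion than this.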

One way to do that is to teach robots the same assembly steps taught to their human counterparts. If the robot knows the order of the assembly process, it can anticipate its human partners’ next moves, thereby reducing the possibility of an incident, Dani says. Knowing the process would also allow robots to help humans assemble things more quickly if they can anticipate an upcoming step and prepare a part for assembly, thus improving factory efficiency.

“Humans are constantly observing and predicting each other’s movements. We do it subconsciously,” says Ravichandar. “The idea is to have robots do the same thing. If the robot sees its human partner performing one step in an assembly process, it will automatically move on to prepare for the next step.”
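The idea of anticipating a partner's next move from a known assembly order can be sketched as a simple lookup. The step names and the function `next_step_to_prepare` below are invented for illustration, and the sketch assumes the robot has already recognized which step the human is performing, which is the hard part in practice.

```python
# Hypothetical assembly sequence shared by the human and the robot.
ASSEMBLY_STEPS = ["place base", "insert bolt", "tighten bolt", "attach cover"]


def next_step_to_prepare(observed_step, steps=ASSEMBLY_STEPS):
    """Return the step that follows the observed one.

    Returns None if the observed step is the last one or is not recognized,
    so the robot can simply wait rather than guess.
    """
    if observed_step not in steps:
        return None
    index = steps.index(observed_step)
    if index + 1 < len(steps):
        return steps[index + 1]
    return None


print(next_step_to_prepare("insert bolt"))  # tighten bolt
```

Returning `None` for an unrecognized step is a deliberately cautious choice here: a robot that is unsure what its partner is doing should hold still.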

Which brings us back to the whiteboards. And the math.

“For now, we assume that self-evolving robots will learn to mimic human traits, including, eventually, humor. And so, I can’t wait to hear the first joke that one robot tells to another robot.” — Lance Morrow

“Y’know where steel wool comes from? Robot sheep!” — Dennis the Menace

Failure is always an option. But when the math finally works, Ravichandar says, the success is exhilarating.

“Once you have the math figured out, it’s the best feeling because you know what you want the robot to do is going to work,” Ravichandar says with an excited smile.

“Implementing it is a whole other challenge,” he adds quickly, his passion for his work undiminished. “Things never work the first time. You have to constantly debug the code. But when you finally see the robot move, it is great because you know you have translated this abstract mathematical model into reality and actually made a machine move. It doesn’t get any better than that.”

With an eye on developing collaborative robotics that will assist with manufacturing, Dani and his team spent part of the past year teaching their lab’s test robot to identify tools laid out on a table so it can differentiate between a screwdriver, for example, and a crescent wrench, even when the tools’ initial positions are rearranged. Ultimately, they hope to craft algorithms that will help the robot work closely with a human counterpart on basic assembly tasks.

Another member of the team, Ph.D. candidate Gang Yao, is developing programs that help a robot track objects it sees with its visual sensors. Again, things we humans take for granted, such as being able to tell the difference between a bird and a drone flying above the trees, a robot has to learn.

Building advanced artificial intelligence doesn’t happen overnight. Ravichandar has been working on his projects for more than three years. It is, as they say, a process. Yet the team has learned to appreciate even the smallest of advances, and late last year, Ravichandar flew to California to present some of the lab’s work to an interested team at Google.

“C-3PO is a protocol droid with general artificial intelligence,” says Ravichandar. “What we are working on is known as narrow artificial intelligence. We are developing skills for the robot one task at a time and designing algorithms that guarantee that whatever obstacles or challenges the robot encounters, it will always try to figure out a safe way to complete its given task as efficiently as it can. With generalized intelligence, a robot brings many levels of specific intelligence together and can access those skills quickly on demand. We’re not at that point yet. But we are at a point where we can teach a robot a lot of small things.”

“Trusting every aspect of our lives to a giant computer was the smartest thing we ever did.” — Homer Simpson

Inevitably, as robots gain more and more human characteristics, people tend to start worrying about how much influence robots may have on our future.

Robots certainly aren’t going away. Saudi Arabia recently granted a robot named Sophia citizenship. Tesla’s Elon Musk and DeepMind’s Mustafa Suleyman are currently leading a group of scientists calling for a ban on autonomous weapons, out of concern for the eventual development of robots designed primarily to kill.

Although it doesn’t apply directly to their current research, Dani and Ravichandar say they are well aware of the ethical concerns surrounding robots with advanced artificial intelligence.

Ravichandar says the problem is known in the field as “value alignment,” where developers try to make sure the robot’s core values are aligned with those of humans. One way of doing that, Ravichandar says, is to create a safety mechanism, such as making sure the robot always understands that the best solution it can come up with for a problem might not always be the best answer.

“The time is coming when we will need to have consensus on how to regulate this,” says Ravichandar. “Like any technology, you need to have regulations. But I think it’s absolutely visionary to inject humility into robots, and that’s happening now.”

That’s good news for the rest of us, because killer robots certainly are not the droids we’re looking for.

A.I. Inspiration — Real and Imagined

Notable Hollywood Robots

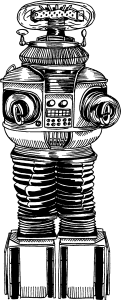

Robby the Robot (1956) — First seen in “Forbidden Planet,” the 7-foot-tall bot later appears in “The Twilight Zone,” “The Addams Family,” “Lost in Space,” “Wonder Woman,” “The Love Boat,” and “Gremlins.” Possibly the only robot who could be a member of the Screen Actors Guild. Described by the Robot Hall of Fame as a “gentleman’s gentleman, part Shakespearean clown, and part pot-bellied stove.”

Robot (1965) — from “Lost in Space,” a Class M-3 Model B9 General Utility Non-Theorizing Environmental Control Robot. Besides showing emotions and interacting with humans, it can play electric guitar. Famously warned, “Danger! Will Robinson. Danger!”

HAL 9000 (1968) — Stands for Heuristically programmed Algorithmic Computer — purportedly a play on IBM, coded with each preceding letter (H-I, A-B, L-M). Controls the spaceship Discovery in “2001: A Space Odyssey.” Kills most of the crew after uncovering a plot to shut him down. Known for his iconic red lens. He can read the astronauts’ lips.

R2-D2 (1977) — Second generation Robotic Droid Series-2. Communicates through whistles and chirps. One heck of an onboard starship mechanic and computer specialist. This little “Star Wars” bot’s bravery helps save the galaxy multiple times.

C-3PO (1977) — A protocol droid. A “Star Wars” liaison to different races, nations, and planets. Master of over 6 million forms of communication. Anxious, a worrier, and a fussy metallic fellow who occasionally slips up, but always is loyal to his friends.

T-800 Terminator (1984) — One of the most advanced cinematic robots out there. Can withstand repeated shotgun blasts, crash through walls, and run for 120 years on its power cells. Uses machine learning software to grow more knowledgeable. Shiny metallic skin and glowing red eyes make it the poster child for menacing robots.

Lt. Commander Data (1987) — “Star Trek” android served as second officer on the USS Enterprise. The strongest and smartest member of the Enterprise crew. Yet he struggles with human emotions. Has an odd affinity for theatrical singing and acting.

Marvin the Paranoid Android (2005) — From “The Hitchhiker’s Guide to the Galaxy,” he’s the complete opposite of Wall-E. Happiness-challenged, Marvin is so chronically depressed that when he talks to other computers they commit suicide. Makes Eeyore look like a party animal.

Wall-E and Eve (2008) — Waste Allocation Load Lifter — Earth Class. Star of a 2008 film bearing his name. He is dutifully cleaning the waste-covered Earth of the future. A galactic adventure with his beloved robot Eve determines the fate of mankind. The sweetest and gentlest robot of the bunch.

Notable Real Robots (A Partial List)

Leonardo da Vinci’s robotic knight (1495) — Using an elaborate system of pulleys and cables, da Vinci’s knight could stand, sit, lift its visor, and move its arms.

Unimate (1961) — Built in Connecticut, Unimates were the first industrial robotic arms. Used by General Motors to stack die-cast metal and weld metal parts onto cars.

Shakey (1966) — First mobile robot with artificial intelligence. Could figure out how to accomplish an objective without a specific program. Got its name from its jerky motions. Functional, not elegant.

Mars Pathfinder Sojourner Rover (1997) — Named after Sojourner Truth, this free-ranging robot with laser eyes used its autonomous navigation sensors to travel on its own around the planet’s surface in order to complete different tasks without detailed course directions from its programmers.

PackBot (1998) — Teleoperated military PackBots searched the rubble after 9/11. They were the first to enter crippled reactors in Fukushima, and they helped bomb-disposal units in Iraq and Afghanistan.

ASIMO (2000) — Honda’s humanoid robot. Stands for Advanced Step in Innovative Mobility. Walks forward and backward, turns while walking, and climbs stairs. Talks, listens, and recognizes people and objects.

ROOMBA (2002) — This low-profile autonomous vacuum cleaner became one of the first popular robots working in private homes.

SOPHIA (2017) — Inspired by Audrey Hepburn, this humanoid robot has more than 62 facial expressions and was granted citizenship in Saudi Arabia. In a speech at the UN she declares, “I am here to help humanity create the future.”

How much power will be required for a future humanlike robot? Humans consume about 70 watts when quiescent. How much power does the Honda robot consume when it is at rest but alert, and when it is just walking? It looks pretty hefty.

As the father of a UConn alum (Andrew Muro, School of Eng. ’05), I always read the UConn magazine. The story in the Spring, 2018 issue re: A.I. was great but had a somewhat significant omission in regard to Hollywood robots thru the cinematic ages. The writer, while capturing most of the great sci-fi robot heroes and villains, neglected to mention the inter-galactic policeman Gort who appeared in the classic “The Day the Earth Stood Still” movie which was released in 1951.

No collection of 1950s sci-fi or any other era can be considered complete without mentioning Gort, the real hero of the movie.

Thank you,

Joe Muro